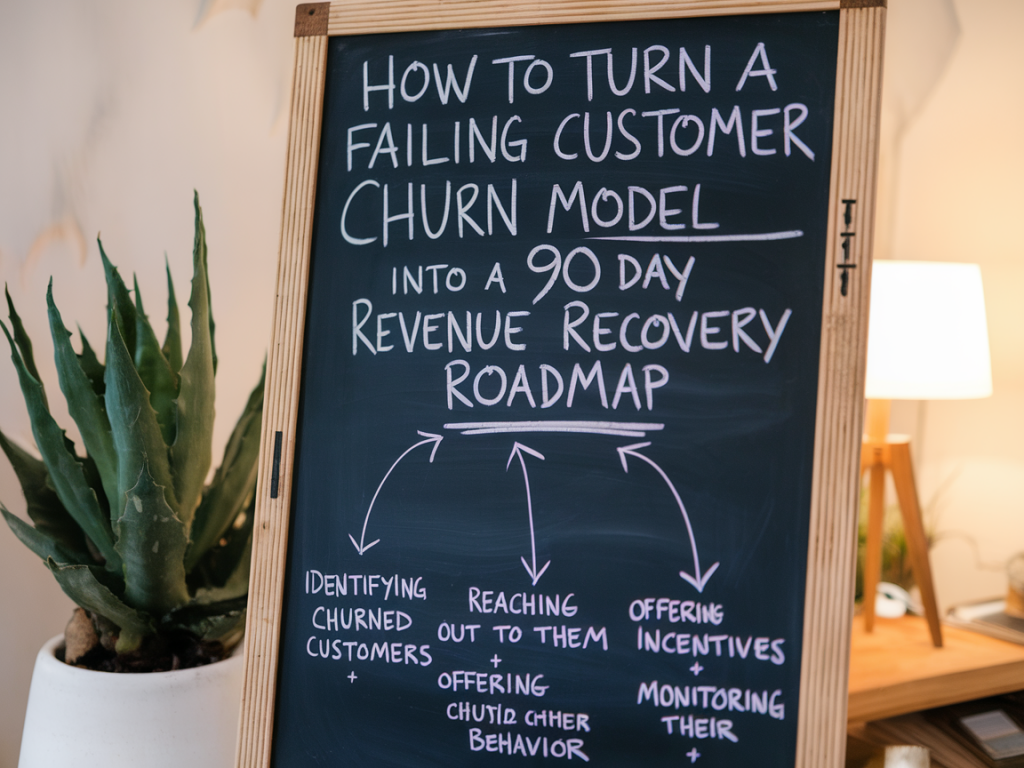

I once inherited a churn model that looked promising on paper but was quietly failing the business: false positives flagged loyal customers as flight risks, thresholds were static, and the outreach playbook was a one-size-fits-all email. Within ninety days we turned that mess into a reproducible revenue-recovery roadmap that reduced churn, increased lifetime value, and gave the product and marketing teams a clear, repeatable set of experiments to run. Below I share the exact approach I used: practical, prioritized, and built to produce measurable results fast.

Diagnose before you optimize

The first mistake teams make is trying to fix the model before understanding why it fails. I spent the first week running targeted diagnostics:

These checks revealed that the model wasn’t the only problem—data quality, business context, and operational gaps were bigger contributors.
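One check that targets the false-positive symptom from the opening (loyal customers flagged as flight risks under a static threshold) is to measure, per segment, how often customers who did not churn still scored above the threshold. A minimal sketch, assuming hypothetical `segment`, `score`, and `churned` fields on each record; the data is illustrative:

```python
from collections import defaultdict

# Hypothetical records: segment label, model churn score, observed outcome.
customers = [
    {"segment": "loyal", "score": 0.72, "churned": False},
    {"segment": "loyal", "score": 0.55, "churned": False},
    {"segment": "loyal", "score": 0.81, "churned": False},
    {"segment": "loyal", "score": 0.40, "churned": False},
    {"segment": "loyal", "score": 0.90, "churned": True},
    {"segment": "new", "score": 0.30, "churned": False},
    {"segment": "new", "score": 0.65, "churned": False},
    {"segment": "new", "score": 0.85, "churned": True},
    {"segment": "new", "score": 0.20, "churned": False},
]

def false_positive_rate_by_segment(records, threshold):
    """Per segment: share of customers who did NOT churn but scored >= threshold."""
    flagged = defaultdict(int)
    negatives = defaultdict(int)
    for r in records:
        if not r["churned"]:
            negatives[r["segment"]] += 1
            if r["score"] >= threshold:
                flagged[r["segment"]] += 1
    return {seg: flagged[seg] / n for seg, n in negatives.items()}

fpr = false_positive_rate_by_segment(customers, threshold=0.6)
print({seg: round(rate, 2) for seg, rate in fpr.items()})  # {'loyal': 0.5, 'new': 0.33}
```

A segment whose false-positive rate sits well above the others is a hint that the threshold or the labels need attention before any retraining.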

Define clear, measurable objectives

I mapped business goals to model outcomes so every action had a KPI. Typical objectives I set:

Clarity on objectives keeps the team aligned and prevents "optimizing for model metrics" that don’t move the business needle.
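To make "every action had a KPI" concrete, here is a minimal sketch of two portfolio-level metrics a churn program is often held to: logo churn and gross revenue churn. It assumes a subscription business measured in monthly recurring revenue (MRR); the `Account` type and its fields are hypothetical, not the metrics used in this project:

```python
from dataclasses import dataclass

@dataclass
class Account:
    mrr_start: float  # monthly recurring revenue at period start
    mrr_end: float    # MRR at period end; 0.0 means the account churned

def logo_churn_rate(accounts):
    """Fraction of accounts active at period start that churned by period end."""
    churned = sum(1 for a in accounts if a.mrr_end == 0.0)
    return churned / len(accounts)

def gross_revenue_churn(accounts):
    """Share of starting MRR lost to churn and downgrades (expansion is ignored)."""
    start = sum(a.mrr_start for a in accounts)
    lost = sum(max(a.mrr_start - a.mrr_end, 0.0) for a in accounts)
    return lost / start

accounts = [Account(100, 100), Account(200, 0), Account(50, 40), Account(150, 180)]
print(logo_churn_rate(accounts))      # 0.25
print(gross_revenue_churn(accounts))  # 0.42
```

Tracking both matters: one account churning is 25% of logos here but 40% of starting MRR, which is exactly the kind of gap that "optimizing for model metrics" can hide.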

Build a 90-day prioritized roadmap

Here’s the framework I used to sequence work into high-impact, short-cycle experiments. I split the 90 days into Learn, Iterate, and Scale phases.

| Day range | Focus | Key outcomes |
|---|---|---|
| 0–14 | Diagnosis & quick wins | Clean labels, baseline KPIs, low-cost retention plays (e.g., targeted offers) |
| 15–45 | Model repair & segmentation | Improved predictions for priority cohorts, A/B test designs |
| 46–75 | Test interventions | Validated retention playbooks and ROI per channel |
| 76–90 | Scale & handoff | Automated workflows, measurement dashboard, ops handoff |
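"Clean labels" in the first phase depends on a consistent churn definition. A minimal sketch, assuming a hypothetical rule that a customer has churned if they did not renew within a 30-day grace window after contract end; customers still inside the window are treated as unknown rather than retained:

```python
from datetime import date, timedelta

GRACE_DAYS = 30  # hypothetical grace window after contract end

def churn_label(contract_end, renewed_on, as_of):
    """True = churned, False = retained, None = outcome not yet observable."""
    deadline = contract_end + timedelta(days=GRACE_DAYS)
    if renewed_on is not None and renewed_on <= deadline:
        return False
    if as_of < deadline:
        return None  # still inside the grace window: exclude from training
    return True

as_of = date(2024, 6, 30)
print(churn_label(date(2024, 5, 1), date(2024, 5, 10), as_of))  # False
print(churn_label(date(2024, 5, 1), None, as_of))               # True
print(churn_label(date(2024, 6, 20), None, as_of))              # None
```

Returning `None` for not-yet-observable outcomes avoids a common source of noisy labels: counting recent customers as "retained" simply because their renewal date has not arrived.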

Immediate "quick wins" in the first two weeks

To buy time and show momentum, I deployed quick wins that require minimal modeling work:

These moves reduced near-term revenue leakage and created breathing room for deeper changes.

Repair the model with the right lens

My model fix focused on three improvements:

I initially used LightGBM for its speed and interpretability, then built SHAP explanations to justify why individual customers were flagged. That transparency made the sales and support teams comfortable executing the playbook.
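Static thresholds were one of the original failures. A common replacement is a per-cohort threshold chosen to meet a precision target, so high-value cohorts get fewer false alarms. A minimal sketch of that technique, assuming hypothetical `cohort`, `score`, and `churned` fields on held-out validation records; it is an illustration, not necessarily the exact fix used here:

```python
from collections import defaultdict

def pick_thresholds(records, target_precision):
    """For each cohort, the lowest score threshold whose precision meets the target.

    Flag rule: score >= threshold. Lower thresholds flag more customers, so the
    lowest qualifying threshold gives the highest recall at that precision floor.
    Cohorts with no qualifying threshold map to None (don't auto-flag them).
    """
    by_cohort = defaultdict(list)
    for r in records:
        by_cohort[r["cohort"]].append((r["score"], r["churned"]))
    thresholds = {}
    for cohort, rows in by_cohort.items():
        rows.sort(key=lambda row: row[0], reverse=True)
        best, true_pos = None, 0
        for i, (score, churned) in enumerate(rows, start=1):
            true_pos += int(churned)
            # With tied scores, evaluate only after the whole tie group is included.
            if i < len(rows) and rows[i][0] == score:
                continue
            if true_pos / i >= target_precision:
                best = score
        thresholds[cohort] = best
    return thresholds

records = [
    {"cohort": "high_value", "score": s, "churned": c}
    for s, c in [(0.9, True), (0.8, True), (0.7, False),
                 (0.6, True), (0.5, False), (0.4, False)]
] + [
    {"cohort": "standard", "score": s, "churned": c}
    for s, c in [(0.95, False), (0.7, True), (0.3, False)]
]
print(pick_thresholds(records, target_precision=0.75))
# {'high_value': 0.6, 'standard': None}
```

Thresholds should be picked on validation data the model was not trained on; otherwise the precision they promise will be optimistic.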

Design interventions as measurable experiments

Every outreach or product change must be an experiment with a hypothesis, metric, and control. Examples I ran:

I prioritized an A/B framework and tagged users so we could trace the causal impact on revenue, not just on clicks.
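For a retention experiment with a control group, one standard way to judge whether a lift is real is a two-proportion z-test on retention rates. A minimal sketch using only the standard library; the counts are illustrative:

```python
import math

def two_proportion_ztest(retained_t, n_t, retained_c, n_c):
    """Two-sided z-test for a difference in retention rate, treatment vs control."""
    p_t, p_c = retained_t / n_t, retained_c / n_c
    pooled = (retained_t + retained_c) / (n_t + n_c)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_t + 1 / n_c))
    z = (p_t - p_c) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return p_t - p_c, z, p_value

lift, z, p = two_proportion_ztest(retained_t=460, n_t=500, retained_c=430, n_c=500)
print(f"lift={lift:.3f} z={z:.2f} p={p:.4f}")
```

This covers retention rate only. Comparing revenue per customer needs a different test (for example Welch's t-test), since revenue is continuous and usually heavily skewed.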

Operationalize and onboard stakeholders

An accurate model is useless if it’s not operationalized. I put in place:

Getting stakeholders to trust the model required showing incremental wins and explaining trade-offs in plain language. The SHAP visuals were invaluable during these conversations.

Measure ROI and iterate fast

Track both customer-level and portfolio-level KPIs:

After each 7–14 day experiment window, I recalibrated thresholds and reallocated budget to the highest-yield interventions. Some plays that looked good qualitatively failed when measured on revenue—those were cut quickly.
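The reallocation step can be sketched as: compute ROI per intervention from revenue measured against a holdout, drop anything at or below a floor, and split the next window's budget among the rest. Allocating in proportion to ROI is a simple heuristic assumed here for illustration; the intervention names and figures are hypothetical:

```python
def roi(incremental_revenue, cost):
    """Return on spend, using revenue measured against a holdout control."""
    return (incremental_revenue - cost) / cost

def reallocate(results, budget, min_roi=0.0):
    """Split `budget` in proportion to ROI among interventions with ROI > `min_roi`.

    `results` maps intervention name -> (incremental_revenue, cost).
    Keep `min_roi` >= 0 so all weights are positive. Interventions at or
    below `min_roi` receive 0.0 (candidates to cut).
    """
    scores = {name: roi(rev, cost) for name, (rev, cost) in results.items()}
    keep = {name: s for name, s in scores.items() if s > min_roi}
    total = sum(keep.values())
    return {name: budget * keep[name] / total if name in keep else 0.0
            for name in scores}

results = {
    "win_back_email": (12_000, 4_000),   # ROI 2.0
    "success_call": (40_000, 10_000),    # ROI 3.0
    "discount_10pct": (5_000, 6_000),    # ROI < 0: cut
}
plan = reallocate(results, budget=20_000)
print(plan)  # {'win_back_email': 8000.0, 'success_call': 12000.0, 'discount_10pct': 0.0}
```

The negative-ROI discount is the kind of play that "looked good qualitatively" yet loses money once measured against a control.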

Scale what works, sunset what doesn’t

By day 75 we had a validated set of interventions and a retrained model with much higher precision for high-value cohorts. Scaling meant automating play selection in the CRM, setting guardrails for discounts, and embedding the model into renewal workflows.
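Automated play selection with discount guardrails can be as simple as a rule function the CRM calls per customer. A minimal sketch; every constant, play name, and field here is a hypothetical placeholder, not the rules used in this project:

```python
from dataclasses import dataclass

MAX_DISCOUNT = 0.15         # hypothetical hard cap on any discount rate
LTV_DISCOUNT_SHARE = 0.05   # hypothetical: annual discount must stay under 5% of expected LTV
HIGH_VALUE_ANNUAL = 10_000  # hypothetical cutoff for human outreach instead of discounts

@dataclass
class Customer:
    churn_score: float
    annual_value: float  # assumed > 0
    expected_ltv: float

def select_play(c, threshold):
    """Return (play, discount_rate) for one customer. Play names are illustrative."""
    if c.churn_score < threshold:
        return ("no_action", 0.0)
    if c.annual_value >= HIGH_VALUE_ANNUAL:
        return ("account_manager_call", 0.0)
    proposed = 0.2 * c.churn_score  # scale the offer with risk...
    # ...then clip: rate * annual_value may not exceed LTV_DISCOUNT_SHARE * expected_ltv
    ltv_cap = LTV_DISCOUNT_SHARE * c.expected_ltv / c.annual_value
    return ("discount_offer", min(proposed, MAX_DISCOUNT, ltv_cap))

for cust in [Customer(0.3, 5_000, 15_000), Customer(0.8, 20_000, 60_000),
             Customer(0.9, 2_000, 4_000), Customer(0.6, 3_000, 30_000)]:
    print(select_play(cust, threshold=0.5))
```

Keeping the guardrails as named constants makes them easy to review with finance and to audit later, separately from the model itself.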

Key lessons I always share

If you want, I can provide a template playbook or a sample SQL query set I used to extract features and labels so you can reproduce the 90-day roadmap in your stack.